Artificial intelligence (AI) is no longer just a buzzword. It is rapidly entering a phase where multimodal capabilities, agentic behaviour, and questions of trust and regulation are front and center. As we progress through 2025, these shifts are transforming how people work, how businesses operate, and how society thinks about AI. Below, we explore the key dimensions of this change and what it means for everyone, from developers to end users.

1. Beyond text: The rise of multimodal AI

Historically, large language models (LLMs) dominated the conversation. But the next wave is multimodal AI: systems that handle not only text but also voice, images, video, sensor data, and physical interaction.

- For example: AI models that can look at a picture, hear audio, and then generate a response that combines those modalities.

- Sector-specific integration is accelerating: manufacturing, healthcare, robotics.

Why it matters: Much richer interactions become possible—imagine a virtual assistant that looks at your workspace via camera, hears your voice, and suggests actions based on both.
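To make the idea concrete, here is a minimal sketch of how a single request might bundle several modalities before it is handed to a model. Everything in it is hypothetical: the `Part` and `MultimodalRequest` names, the three allowed modalities, and the file names are illustration only, not any particular vendor's API.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical set of modalities this sketch accepts.
ALLOWED_MODALITIES = {"text", "image", "audio"}


@dataclass
class Part:
    modality: str  # one of ALLOWED_MODALITIES
    content: str   # the text itself, or a path/reference for media


@dataclass
class MultimodalRequest:
    parts: List[Part] = field(default_factory=list)

    def add(self, modality: str, content: str) -> "MultimodalRequest":
        """Append one input part, rejecting modalities we do not know."""
        if modality not in ALLOWED_MODALITIES:
            raise ValueError(f"unsupported modality: {modality}")
        self.parts.append(Part(modality, content))
        return self  # returning self allows chained calls

    def modalities(self) -> List[str]:
        """Return the distinct modalities present, sorted alphabetically."""
        return sorted({part.modality for part in self.parts})


# The workspace assistant example: a camera frame, a spoken question, a typed note.
request = (
    MultimodalRequest()
    .add("image", "workspace.jpg")
    .add("audio", "question.wav")
    .add("text", "What should I tidy first?")
)
print(request.modalities())  # ['audio', 'image', 'text']
```

The point of the sketch is only that the inputs travel together as one request, so a model can reason over the picture and the spoken question at the same time rather than handling each in isolation.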

Keyword opportunities: multimodal AI, AI modalities, AI voice-image integration, multimodal models.

2. Agentic AI: More autonomy, more complexity

Another major trend is agentic AI: not just models that generate output, but systems that act autonomously on a user's behalf, plan, adapt, and interact with the world.

- Instead of a chatbot answering your query, an agentic AI might book your flight, select your hotel, update your calendar, notify you of changes—all with minimal prompting.

- With greater power come greater risks: autonomy increases the chance of error, unexpected behaviour, or misuse.

Why it matters: For enterprises and end users alike, this means moving from assistive tools to active collaborators. The consequence is higher productivity—but also greater need for oversight.
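One common way to pair autonomy with oversight is an approval gate: the agent runs low-risk steps on its own but pauses for a human decision before anything costly or hard to undo. The sketch below is a simplified illustration under that assumption. The `Step` type, the `risky` flag, and the `run_plan` function are hypothetical names, and no real booking or calendar service is called.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    risky: bool  # assumption: True if the step spends money or is hard to undo


def run_plan(steps: List[Step], approve: Callable[[Step], bool]) -> List[str]:
    """Run each step in order, asking `approve` before any risky one."""
    log = []
    for step in steps:
        if step.risky and not approve(step):
            # A human (or policy) declined, so the agent skips this step.
            log.append(f"skipped: {step.name}")
            continue
        # A real agent would call a tool here; this sketch only records the step.
        log.append(f"done: {step.name}")
    return log


plan = [
    Step("search flights", risky=False),
    Step("book flight", risky=True),
    Step("update calendar", risky=False),
]

# Here the reviewer declines every risky step.
log = run_plan(plan, approve=lambda step: False)
print(log)  # ['done: search flights', 'skipped: book flight', 'done: update calendar']
```

In practice the `approve` callback could prompt a person, check a spending limit, or consult a policy. The design choice worth noting is that the gate sits outside the agent's own reasoning, so oversight does not depend on the agent judging itself correctly.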

Keyword opportunities: AI agents, agentic AI, autonomous AI systems, intelligent agents.

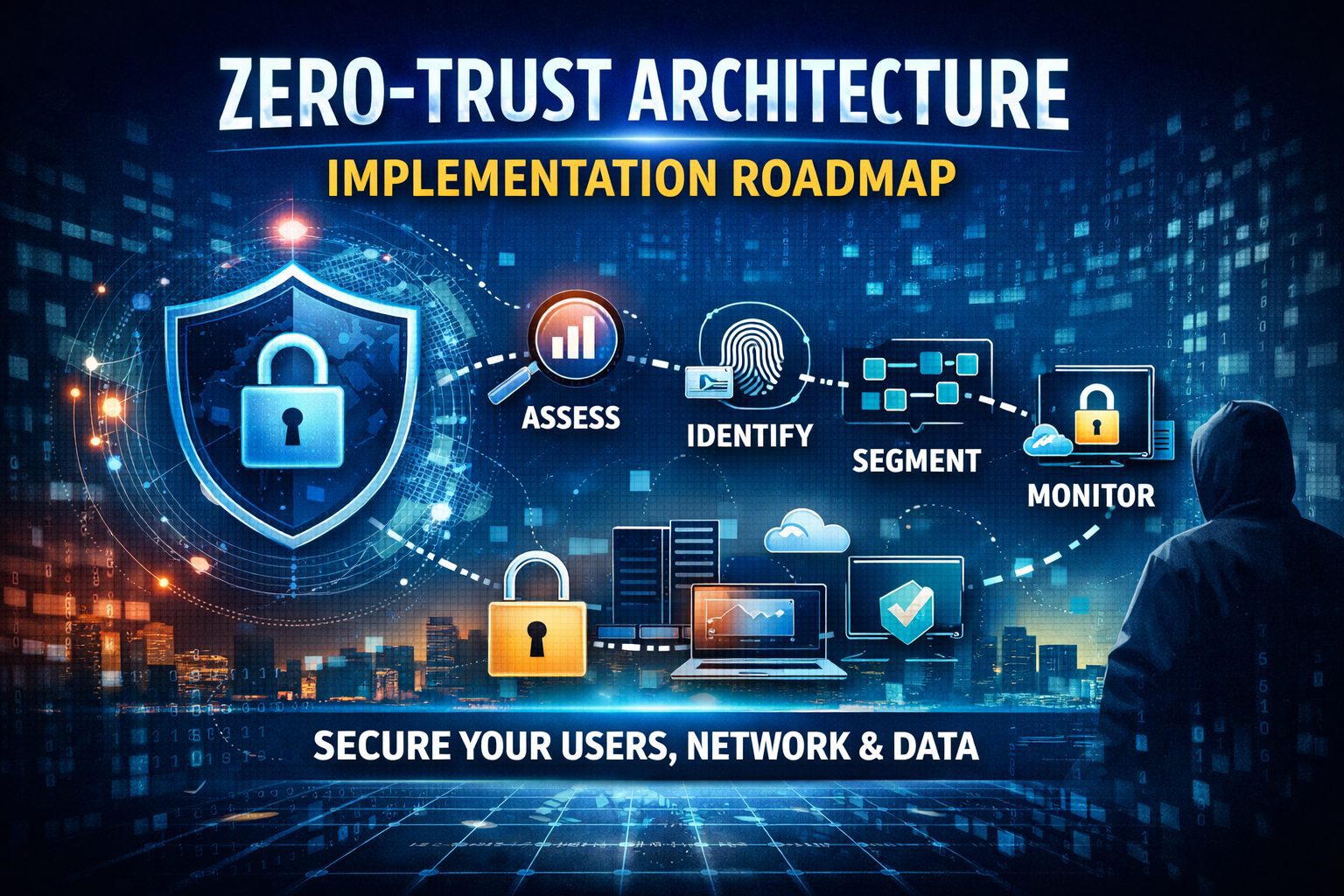

3. Shadow AI, Trust & Regulation

While innovation surges, three intertwined issues are becoming urgent: uncontrolled AI adoption (often called “shadow AI”), trust (can users distinguish AI-generated content?), and regulation that is still catching up.

- Shadow AI: Employees or departments using unapproved AI tools without security or governance oversight.

- Trust / “AI trust paradox”: As AI gets better at mimicking human-level text, audio, and video, users struggle to tell whether something is AI-generated and whether it is accurate.

- Regulation & governance: Frameworks such as the EU AI Act signal that governments are stepping in to require transparency, accountability, and rights protection.

Why it matters: Without trust and governance, the benefits of AI can be undermined by misuse, bias, misinformation, security risks, or reputational damage.
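A first step toward spotting shadow AI is often as simple as comparing the tools actually in use against an approved list. The following is a minimal sketch of that check. The tool names, team names, and the `(team, tool)` log format are all made up for illustration, and a real audit would draw on whatever usage data an organization actually collects.

```python
# Hypothetical allowlist of AI tools that security and governance have approved.
APPROVED_TOOLS = {"internal-assistant", "vendor-copilot"}


def find_shadow_ai(usage_log):
    """Map each unapproved tool to the sorted list of teams using it."""
    shadow = {}
    for team, tool in usage_log:
        if tool not in APPROVED_TOOLS:
            shadow.setdefault(tool, set()).add(team)
    return {tool: sorted(teams) for tool, teams in shadow.items()}


# Hypothetical usage records as (team, tool) pairs.
usage_log = [
    ("marketing", "free-chatbot"),
    ("finance", "internal-assistant"),
    ("sales", "free-chatbot"),
    ("hr", "resume-screener"),
]

report = find_shadow_ai(usage_log)
for tool, teams in report.items():
    print(f"{tool}: {', '.join(teams)}")
```

A report like this does not fix the governance problem by itself, but it turns a vague worry into a concrete list of tools to review, approve, or replace.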

Keyword opportunities: shadow AI, trusted AI, AI governance, AI regulation 2025.

4. Impact on Work, Industry & Skills

These advances aren’t happening in a vacuum—they are reshaping the workplace and economy.

- Automation is shifting from repetitive tasks to more complex, contextual work. Some industry estimates suggest that AI could automate work activities that currently take up 60-70% of employee time.

- Vertical AI integration: instead of generic models, we’re seeing AI tailored for healthcare, finance, manufacturing, etc.

- Skills: Demand grows for those who can interface with AI systems, interpret output, ensure governance, and build multimodal/agentic solutions.

Why it matters: Job roles will evolve. Organizations must prepare for not just adoption, but also change management, reskilling, and strategic oversight.

Keyword opportunities: AI adoption in workplace, vertical AI adoption, AI skills 2025, future of work AI.

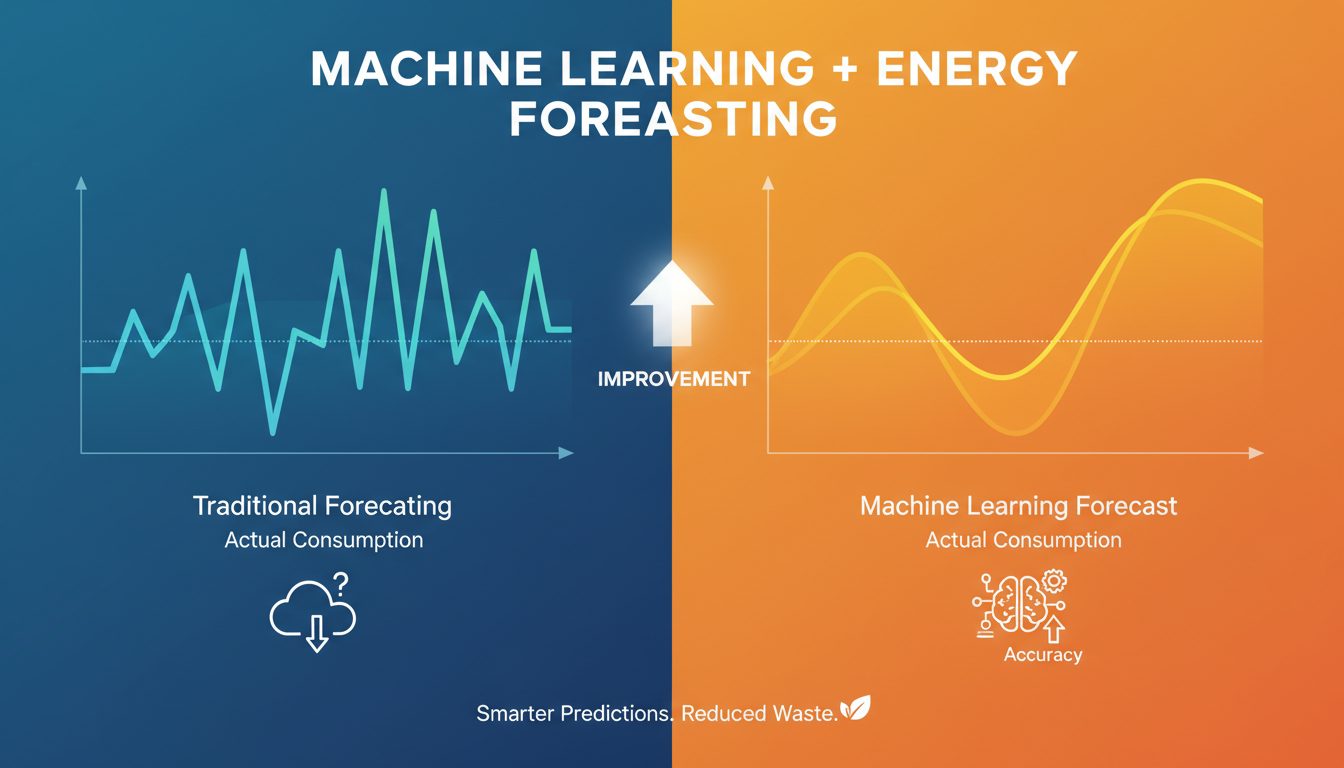

5. Sustainability & Ethical Boundaries

As AI infrastructure scales—data centres, compute, models—the environmental footprint and ethical implications become salient.

- The “environmental footprint of AI” is emerging as a key topic, driven by rapid growth in data centres (some projections point to a doubling) and by concerns over energy and water use.

- Ethical issues: model bias, transparency, the risk of replacing human creativity, algorithmic injustice.

Why it matters: To build long-term trust and sustainable value, AI systems must be efficient, equitable, and transparent. Stakeholders increasingly care about ethics and ESG in tech.

Keyword opportunities: sustainable AI, AI ethics 2025, environmental impact AI, fair AI models.

Conclusion

In 2025, the most compelling narrative in AI isn’t just “what models can do”, but “how they integrate, act, and are governed”. Multimodal systems and AI agents are pushing the envelope. At the same time, companies and society are grappling with trust, regulation, shadow adoption, and ethical and environmental impacts.

For businesses, developers, and end users alike, the message is clear: adopt the power of AI, but do so intelligently. Build systems that are capable and responsible, robust and transparent, innovative and ethical.

Final thought: If you remember one takeaway, make it this: AI in 2025 must act, integrate, and earn trust. Without all three, the promise alone isn’t enough.